The FSI console uses a rather small font size by default, which makes sharing the screen on a projector really uncomfortable: source code in FSI is always small and hard to read. Until today, I never thought that I could configure the font, colors, font size, etc. In fact, it is very easy to do:


Stanford CoreNLP is available on NuGet for F#/C# devs

Update (2014, January 3): Links and/or samples in this post might be outdated. The latest versions of the samples are available on the new Stanford.NLP.NET site.

Stanford CoreNLP provides a set of natural language analysis tools which can take raw English language text input and give the base forms of words, their parts of speech, whether they are names of companies, people, etc., normalize dates, times, and numeric quantities, and mark up the structure of sentences in terms of phrases and word dependencies, and indicate which noun phrases refer to the same entities. Stanford CoreNLP is an integrated framework, which makes it very easy to apply a bunch of language analysis tools to a piece of text. Starting from plain text, you can run all the tools on it with just two lines of code. Its analyses provide the foundational building blocks for higher-level and domain-specific text understanding applications.

Stanford CoreNLP integrates all Stanford NLP tools, including the part-of-speech (POS) tagger, the named entity recognizer (NER), the parser, and the coreference resolution system, and provides model files for analysis of English. The goal of this project is to enable people to quickly and painlessly get complete linguistic annotations of natural language texts. It is designed to be highly flexible and extensible. With a single option you can change which tools should be enabled and which should be disabled.

Stanford CoreNLP is here and available on NuGet. It is probably the most powerful package among all of The Stanford NLP Group software packages. Please read the usage overview on the Stanford CoreNLP home page to understand what it can do, how you can configure an annotation pipeline, which steps are available, which models you need, and so on.

I want to say thank you to Anonymous 😉 and @OneFrameLink for their contributions and for motivating me to finish this work.

Please follow these steps to get started:

- Install-Package Stanford.NLP.CoreNLP

- Download models from The Stanford NLP Group site.

- Extract the models from stanford-corenlp-3.2.0-models.jar (a .jar file is an ordinary zip archive, so just unzip it) and remember the new folder location.

- You are ready to start.

Before using Stanford CoreNLP, we need to define the annotation pipeline, for example: annotators = tokenize, ssplit, pos, lemma, ner, parse, dcoref.
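It can be handy to catch an incomplete annotators string before creating the pipeline. Below is a minimal F# sketch that checks whether each annotator is preceded by the annotators it depends on. The dependency table is my assumption, based on the annotator requirements listed in the CoreNLP documentation, so please verify it for the CoreNLP version you use. The names requirements and checkAnnotators are hypothetical helpers, not part of CoreNLP.

```fsharp
// ASSUMPTION: annotator -> annotators that must appear earlier in the string,
// based on the CoreNLP annotator documentation; verify for your version.
let requirements =
    Map.ofList
        [ ("tokenize", [])
          ("ssplit", [ "tokenize" ])
          ("pos", [ "tokenize"; "ssplit" ])
          ("lemma", [ "tokenize"; "ssplit"; "pos" ])
          ("ner", [ "tokenize"; "ssplit"; "pos"; "lemma" ])
          ("parse", [ "tokenize"; "ssplit" ])
          ("dcoref", [ "tokenize"; "ssplit"; "pos"; "lemma"; "ner"; "parse" ]) ]

/// Hypothetical helper: returns (annotator, missingRequirement) pairs.
/// An empty list means the order looks valid. Unknown annotators are not checked.
let checkAnnotators (annotators: string) =
    let names =
        annotators.Split([| ',' |], System.StringSplitOptions.RemoveEmptyEntries)
        |> Array.map (fun s -> s.Trim())
        |> List.ofArray
    names
    |> List.mapi (fun i name ->
        // Annotators that appear before the current one
        let earlier = names |> List.take i |> Set.ofList
        defaultArg (Map.tryFind name requirements) []
        |> List.filter (fun req -> not (earlier.Contains req))
        |> List.map (fun req -> name, req))
    |> List.concat
```

For example, checkAnnotators "tokenize, ssplit, pos, lemma, ner, parse, dcoref" returns an empty list, while checkAnnotators "tokenize, ssplit, ner" reports that ner is missing pos and lemma.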

Next, we need to create a StanfordCoreNLP pipeline. To instantiate it, we have to specify all the required properties, or at least the paths to all models used by the annotators listed in the annotators string. Before starting with the samples, let’s define some helpers that are used across all the code pieces: ‘@@’ combines two paths; jarRoot is the path to the folder where we extracted the files from stanford-corenlp-3.2.0-models.jar; modelsRoot is the path to the folder with all the model files; ‘!’ is an overloaded prefix operator that converts a model name (relative to modelsRoot) into the full path to the model file.

let (@@) a b = System.IO.Path.Combine(a,b)
let jarRoot = __SOURCE_DIRECTORY__ @@ @"..\..\temp\stanford-corenlp-full-2013-06-20\stanford-corenlp-3.2.0-models\"
let modelsRoot = jarRoot @@ @"edu\stanford\nlp\models\"
let (!) path = modelsRoot @@ path
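A wrong model path usually surfaces only when the pipeline is created. It may therefore be worth checking first that the extracted model files are where you expect them. Here is a minimal sketch. It assumes you pass the same relative paths that you would give to the ‘!’ operator. missingModels is a hypothetical helper.

```fsharp
open System.IO

/// Hypothetical helper: returns the relative model paths that do not exist under modelsRoot.
let missingModels (modelsRoot: string) (relativePaths: string list) =
    relativePaths
    |> List.filter (fun relPath -> not (File.Exists(Path.Combine(modelsRoot, relPath))))
```

For example, missingModels modelsRoot [ @"lexparser\englishPCFG.ser.gz"; @"ner\english.all.3class.distsim.crf.ser.gz" ] should return an empty list when the models were extracted correctly.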

Now we are ready to instantiate the pipeline, but we need a small trick. For simplicity, the pipeline is configured to use the default model files, and all default paths are relative to the root of stanford-corenlp-3.2.0-models.jar. To make things easier, we can temporarily change the current directory to jarRoot, instantiate the pipeline, and then change the current directory back. This trick dramatically decreases the number of lines of code.

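open java.util
open edu.stanford.nlp.pipeline

let props = Properties()
props.setProperty("annotators","tokenize, ssplit, pos, lemma, ner, parse, dcoref") |> ignore
props.setProperty("sutime.binders","0") |> ignore

// Temporarily switch to jarRoot so that the default (relative) model paths resolve,
// and always switch back, even if the pipeline constructor throws
let curDir = System.Environment.CurrentDirectory
System.IO.Directory.SetCurrentDirectory(jarRoot)
let pipeline =
    try
        StanfordCoreNLP(props)
    finally
        System.IO.Directory.SetCurrentDirectory(curDir)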

However, you do not have to do this: you can configure all models manually. The number of properties (especially model paths) that you need to specify depends on the annotators value. Let’s assume for a moment that we are in the Java world and want to configure the pipeline in a custom way. For exactly this case, stanford-corenlp-3.2.0-models.jar contains StanfordCoreNLP.properties (you can find it in the folder with the extracted files), where you can set property values outside of the code. Most of the properties needed for configuration are already mentioned in this file, and it is easy to understand what is what. But that is not enough to get it working; you also need to look into the Stanford CoreNLP source code. By the way, a few days ago Stanford moved the CoreNLP source code to GitHub, so now it is much easier to browse. Default model paths are specified in DefaultPaths.java, property keys are listed in Constants.java, and the mapping between paths and property names is in Dictionaries.java. With these files, you can dive deeper into pipeline configuration and do whatever you want. For lazy people, I already have a working sample:

let props = Properties()
let (<==) key value = props.setProperty(key, value) |> ignore

"annotators" <== "tokenize, ssplit, pos, lemma, ner, parse, dcoref"
"pos.model" <== ! @"pos-tagger\english-bidirectional\english-bidirectional-distsim.tagger"
"ner.model" <== ! @"ner\english.all.3class.distsim.crf.ser.gz"
"parse.model" <== ! @"lexparser\englishPCFG.ser.gz"
"dcoref.demonym" <== ! @"dcoref\demonyms.txt"
"dcoref.states" <== ! @"dcoref\state-abbreviations.txt"
"dcoref.animate" <== ! @"dcoref\animate.unigrams.txt"
"dcoref.inanimate" <== ! @"dcoref\inanimate.unigrams.txt"
"dcoref.male" <== ! @"dcoref\male.unigrams.txt"
"dcoref.neutral" <== ! @"dcoref\neutral.unigrams.txt"
"dcoref.female" <== ! @"dcoref\female.unigrams.txt"
"dcoref.plural" <== ! @"dcoref\plural.unigrams.txt"
"dcoref.singular" <== ! @"dcoref\singular.unigrams.txt"
"dcoref.countries" <== ! @"dcoref\countries"
"dcoref.extra.gender" <== ! @"dcoref\namegender.combine.txt"
"dcoref.states.provinces" <== ! @"dcoref\statesandprovinces"
"dcoref.singleton.predictor" <== ! @"dcoref\singleton.predictor.ser"

let sutimeRules =
    [| ! @"sutime\defs.sutime.txt";
       ! @"sutime\english.holidays.sutime.txt";
       ! @"sutime\english.sutime.txt" |]
    |> String.concat ","
"sutime.rules" <== sutimeRules
"sutime.binders" <== "0"

let pipeline = StanfordCoreNLP(props)

As you can see, this option is much longer and harder. I recommend using the first one, especially if you do not need to change the default configuration.
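If you do need the manual configuration, one way to shorten it is to keep the model settings as data and resolve them against modelsRoot in one place. Here is a minimal sketch. The names modelSettings and resolveSettings are hypothetical, and the list contains only a few of the keys and relative paths from the sample above.

```fsharp
open System.IO

// Hypothetical: (property key, path relative to modelsRoot), taken from the sample above
let modelSettings =
    [ ("pos.model", @"pos-tagger\english-bidirectional\english-bidirectional-distsim.tagger")
      ("ner.model", @"ner\english.all.3class.distsim.crf.ser.gz")
      ("parse.model", @"lexparser\englishPCFG.ser.gz")
      ("dcoref.demonym", @"dcoref\demonyms.txt") ]

/// Hypothetical helper: turns relative model paths into full paths under modelsRoot.
let resolveSettings (modelsRoot: string) (settings: (string * string) list) =
    settings |> List.map (fun (key, relPath) -> key, Path.Combine(modelsRoot, relPath))
```

Each resolved pair can then be passed to props.setProperty(key, value) |> ignore in a loop, which is exactly what the ‘<==’ operator does one line at a time.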

And now the fun part. Everything else is pretty easy: we create an annotation from our text, pass it through the pipeline, and interpret the results.

open java.io
open edu.stanford.nlp.pipeline

let text = "Kosgi Santosh sent an email to Stanford University. He didn't get a reply."
let annotation = Annotation(text)
pipeline.annotate(annotation)
use stream = new ByteArrayOutputStream()
pipeline.prettyPrint(annotation, new PrintWriter(stream))
printfn "%O" (stream.toString())

Of course, you can also extract all the processing results from the annotated text yourself:

open java.io
open edu.stanford.nlp.ling
open edu.stanford.nlp.pipeline
open edu.stanford.nlp.semgraph
open edu.stanford.nlp.trees
open edu.stanford.nlp.util

let customAnnotationPrint (annotation:Annotation) =
    printfn "-------------"
    printfn "Custom print:"
    printfn "-------------"
    let sentences = annotation.get(CoreAnnotations.SentencesAnnotation().getClass()) :?> java.util.ArrayList
    for sentence in sentences |> Seq.cast<CoreMap> do
        printfn "\n\nSentence : '%O'" sentence
        // Per-token word, part-of-speech tag and named-entity tag
        let tokens = sentence.get(CoreAnnotations.TokensAnnotation().getClass()) :?> java.util.ArrayList
        for token in (tokens |> Seq.cast<CoreLabel>) do
            let word = token.get(CoreAnnotations.TextAnnotation().getClass())
            let pos = token.get(CoreAnnotations.PartOfSpeechAnnotation().getClass())
            let ner = token.get(CoreAnnotations.NamedEntityTagAnnotation().getClass())
            printfn "%O \t[pos=%O; ner=%O]" word pos ner

        // Constituency parse tree of the current sentence
        printfn "\nTree:"
        let tree = sentence.get(TreeCoreAnnotations.TreeAnnotation().getClass()) :?> Tree
        use stream = new ByteArrayOutputStream()
        tree.pennPrint(new PrintWriter(stream))
        printfn "%O" (stream.toString())

        // Collapsed dependencies of the current sentence
        printfn "\nDependencies:"
        let deps = sentence.get(SemanticGraphCoreAnnotations.CollapsedDependenciesAnnotation().getClass()) :?> SemanticGraph
        for edge in deps.edgeListSorted().toArray() |> Seq.cast<SemanticGraphEdge> do
            let gov = edge.getGovernor()
            let dep = edge.getDependent()
            printfn "%O(%s-%d,%s-%d)"
                (edge.getRelation())
                (gov.word()) (gov.index())
                (dep.word()) (dep.index())

The full code sample is available on GitHub. If you run it, you will see the following result:

Sentence #1 (9 tokens):
Kosgi Santosh sent an email to Stanford University.
[Text=Kosgi CharacterOffsetBegin=0 CharacterOffsetEnd=5 PartOfSpeech=NNP Lemma=Kosgi NamedEntityTag=PERSON] [Text=Santosh CharacterOffsetBegin=6 CharacterOffsetEnd=13 PartOfSpeech=NNP Lemma=Santosh NamedEntityTag=PERSON] [Text=sent CharacterOffsetBegin=14 CharacterOffsetEnd=18 PartOfSpeech=VBD Lemma=send NamedEntityTag=O] [Text=an CharacterOffsetBegin=19 CharacterOffsetEnd=21 PartOfSpeech=DT Lemma=a NamedEntityTag=O] [Text=email CharacterOffsetBegin=22 CharacterOffsetEnd=27 PartOfSpeech=NN Lemma=email NamedEntityTag=O] [Text=to CharacterOffsetBegin=28 CharacterOffsetEnd=30 PartOfSpeech=TO Lemma=to NamedEntityTag=O] [Text=Stanford CharacterOffsetBegin=31 CharacterOffsetEnd=39 PartOfSpeech=NNP Lemma=Stanford NamedEntityTag=ORGANIZATION] [Text=University CharacterOffsetBegin=40 CharacterOffsetEnd=50 PartOfSpeech=NNP Lemma=University NamedEntityTag=ORGANIZATION] [Text=. CharacterOffsetBegin=50 CharacterOffsetEnd=51 PartOfSpeech=. Lemma=. NamedEntityTag=O]
(ROOT
  (S
    (NP (NNP Kosgi) (NNP Santosh))
    (VP (VBD sent)
      (NP (DT an) (NN email))
      (PP (TO to)
        (NP (NNP Stanford) (NNP University))))
    (. .)))

nn(Santosh-2, Kosgi-1)
nsubj(sent-3, Santosh-2)
root(ROOT-0, sent-3)
det(email-5, an-4)
dobj(sent-3, email-5)
nn(University-8, Stanford-7)
prep_to(sent-3, University-8)

Sentence #2 (7 tokens):
He didn't get a reply.
[Text=He CharacterOffsetBegin=52 CharacterOffsetEnd=54 PartOfSpeech=PRP Lemma=he NamedEntityTag=O] [Text=did CharacterOffsetBegin=55 CharacterOffsetEnd=58 PartOfSpeech=VBD Lemma=do NamedEntityTag=O] [Text=n't CharacterOffsetBegin=58 CharacterOffsetEnd=61 PartOfSpeech=RB Lemma=not NamedEntityTag=O] [Text=get CharacterOffsetBegin=62 CharacterOffsetEnd=65 PartOfSpeech=VB Lemma=get NamedEntityTag=O] [Text=a CharacterOffsetBegin=66 CharacterOffsetEnd=67 PartOfSpeech=DT Lemma=a NamedEntityTag=O] [Text=reply CharacterOffsetBegin=68 CharacterOffsetEnd=73 PartOfSpeech=NN Lemma=reply NamedEntityTag=O] [Text=. CharacterOffsetBegin=73 CharacterOffsetEnd=74 PartOfSpeech=. Lemma=. NamedEntityTag=O]
(ROOT
  (S
    (NP (PRP He))
    (VP (VBD did) (RB n't)
      (VP (VB get)
        (NP (DT a) (NN reply))))
    (. .)))

nsubj(get-4, He-1)
aux(get-4, did-2)
neg(get-4, n't-3)
root(ROOT-0, get-4)
det(reply-6, a-5)
dobj(get-4, reply-6)

Coreference set:
(2,1,[1,2)) -> (1,2,[1,3)), that is: "He" -> "Kosgi Santosh"
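The NamedEntityTag values above are assigned per token, so multi-word names such as “Stanford University” are split across several tokens. Below is a minimal sketch of merging consecutive tokens that carry the same tag (other than O) into one mention. groupEntities is a hypothetical helper, not a CoreNLP API. It works on (word, ner) pairs, such as the word and ner values read in customAnnotationPrint.

```fsharp
/// Hypothetical helper: merges consecutive tokens that share the same NER tag
/// (ignoring "O") into (mention, tag) pairs, keeping the original order.
let groupEntities (tokens: (string * string) list) =
    // Adds the mention collected so far (if any) to the accumulator
    let flush (words: string list, tag: string) acc =
        match words with
        | [] -> acc
        | _ -> (String.concat " " (List.rev words), tag) :: acc
    let rec loop current acc remaining =
        match remaining, current with
        | [], _ -> List.rev (flush current acc)
        | (_, "O") :: rest, _ -> loop ([], "") (flush current acc) rest
        | (word, tag) :: rest, (words, currentTag) when tag = currentTag ->
            loop (word :: words, tag) acc rest
        | (word, tag) :: rest, _ -> loop ([ word ], tag) (flush current acc) rest
    loop ([], "") [] tokens
```

Applied to the tokens of the first sentence, it returns [("Kosgi Santosh", "PERSON"); ("Stanford University", "ORGANIZATION")].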

C# Sample

C# samples are also available on GitHub.

Stanford Temporal Tagger (SUTime)

SUTime is a library for recognizing and normalizing time expressions. SUTime is available as part of the Stanford CoreNLP pipeline and can be used to annotate documents with temporal information. It is a deterministic rule-based system designed for extensibility.

There is one more useful thing that we can do with CoreNLP: time extraction. We use CoreNLP in much the same way as in the previous sample. First, we create an annotation pipeline and add all the required annotators to it. (Notice that this sample also uses the ‘!’ operator defined at the beginning of the post.)

open java.util
open edu.stanford.nlp.pipeline
open edu.stanford.nlp.tagger.maxent
open edu.stanford.nlp.time

let pipeline = AnnotationPipeline()
pipeline.addAnnotator(PTBTokenizerAnnotator(false))
pipeline.addAnnotator(WordsToSentencesAnnotator(false))
let tagger = MaxentTagger(! @"pos-tagger\english-bidirectional\english-bidirectional-distsim.tagger")
pipeline.addAnnotator(POSTaggerAnnotator(tagger))

let sutimeRules =
    [| ! @"sutime\defs.sutime.txt";
       ! @"sutime\english.holidays.sutime.txt";
       ! @"sutime\english.sutime.txt" |]
    |> String.concat ","
let props = Properties()
props.setProperty("sutime.rules", sutimeRules) |> ignore
props.setProperty("sutime.binders", "0") |> ignore
pipeline.addAnnotator(TimeAnnotator("sutime", props))

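Now we are ready to annotate something. This part is almost the same as in the previous sample. The only difference is that we also set the document date, which SUTime can use as the reference date for relative expressions such as “today”.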

let text = "Three interesting dates are 18 Feb 1997, the 20th of july and 4 days from today."
let annotation = Annotation(text)
annotation.set(CoreAnnotations.DocDateAnnotation().getClass(), "2013-07-14") |> ignore
pipeline.annotate(annotation)

Finally, we need to interpret the annotation results.

open edu.stanford.nlp.ling
open edu.stanford.nlp.time
open edu.stanford.nlp.util

printfn "%O\n" (annotation.get(CoreAnnotations.TextAnnotation().getClass()))
let timexAnnsAll = annotation.get(TimeAnnotations.TimexAnnotations().getClass()) :?> java.util.ArrayList
for cm in timexAnnsAll |> Seq.cast<CoreMap> do
    let tokens = cm.get(CoreAnnotations.TokensAnnotation().getClass()) :?> java.util.List
    let first = tokens.get(0)
    let last = tokens.get(tokens.size() - 1)
    let time = cm.get(TimeExpression.Annotation().getClass()) :?> TimeExpression
    printfn "%A [from char offset '%A' to '%A'] --> %A"
        cm first last (time.getTemporal())

The full code sample is available on GitHub. If you run it, you will see the following result:

18 Feb 1997 [from char offset '18' to '1997'] --> 1997-2-18

the 20th of july [from char offset 'the' to 'July'] --> XXXX-7-20

4 days from today [from char offset '4' to 'today'] --> THIS P1D OFFSET P4D
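The last two values are not full dates. XXXX-7-20 has no year. As I read it, THIS P1D OFFSET P4D means an offset of four days from the current day. If you want concrete dates, one deliberately naive approach is to resolve them against the document date set earlier (2013-07-14). The helpers below are hypothetical and handle only these two patterns. Taking the missing year from the document date is an assumption that can be wrong near a year boundary.

```fsharp
open System
open System.Globalization
open System.Text.RegularExpressions

/// Hypothetical: "XXXX-M-D" (year unknown) -> that day in the document's year.
let fillYear (docDate: DateTime) (value: string) =
    let m = Regex.Match(value, @"^XXXX-(\d{1,2})-(\d{1,2})$")
    if m.Success then Some(DateTime(docDate.Year, int (m.Groups.[1].Value), int (m.Groups.[2].Value)))
    else None

/// Hypothetical: "... OFFSET PnD" -> document date plus n days.
let applyDayOffset (docDate: DateTime) (value: string) =
    let m = Regex.Match(value, @"OFFSET P(\d+)D$")
    if m.Success then Some(docDate.AddDays(float (int (m.Groups.[1].Value))))
    else None

// The document date used in the sample above
let docDate = DateTime.ParseExact("2013-07-14", "yyyy-MM-dd", CultureInfo.InvariantCulture)
```

With this document date, fillYear docDate "XXXX-7-20" gives 2013-07-20 and applyDayOffset docDate "THIS P1D OFFSET P4D" gives 2013-07-18.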

C# Sample

C# samples are also available on GitHub.

Conclusion

This is a pretty awesome library. I hope you enjoy it. Try it out right now!

There are some other, more specific Stanford packages that are already available on NuGet:

FAST Search Server 2010 for SharePoint Versions

Talbott Crowell's Software Development Blog

Here is a table that contains a comprehensive list of FAST Search Server 2010 for SharePoint versions including RTM, cumulative updates (CU’s), and hotfixes. Please let me know if you find any errors or have a version not listed here by using the comments.

| Build | Release | Component | Information | Source (Link to Download) |
|---|---|---|---|---|
| 14.0.4763.1000 | RTM | FAST Search Server | | Mark van Dijk |
| 14.0.5128.5001 | October 2010 CU | FAST Search Server | KB2449730 | Mark van Dijk |
| 14.0.5136.5000 | February 2011 CU | FAST Search Server | KB2504136 | Mark van Dijk |
| 14.0.6029.1000 | Service Pack 1 | FAST Search Server | KB2460039 | Todd Klindt |
| 14.0.6109.5000 | August 2011 CU | FAST Search Server | KB2553040 | Todd Klindt |
| 14.0.6117.5002 | February 2012 CU | FAST Search Server | KB2597131 | Todd Klindt |
| 14.0.6120.5000 | April 2012 CU | FAST Search Server | KB2598329 | Todd Klindt |
| 14.0.6126.5000 | August 2012 CU | FAST Search Server | KB2687489 | Mark van Dijk |
| 14.0.6129.5000 | October 2012 CU | FAST Search Server | KB2760395 | Todd… |


“F# Minsk: Getting started” was held

Wow, about 20 attendees!!! I really did not expect such a rush.

Thank you to everyone who joined us today! You are all welcome at the next F# Minsk meetup.

These are the slides from our talks:

Dropbox for .NET developers

A few days ago, I was faced with the task of developing a Dropbox connector that should be able to enumerate and download files from Dropbox. The ideal solution for me would be a wrapper library for .NET 3.5 that can authorize with Dropbox without user interaction. Here is a list of the .NET libraries/components that are currently available:

- DropNet (NuGet package)

- SharpBox (NuGet package)

- Spring.NET Social Dropbox

- Xamarin Dropbox Sync component.

Spring.NET and the Xamarin component are not options for me right now. DropNet also does not fit my needs, because it is .NET 4+ only; but if your application targets .NET 4+, DropNet should be the best choice for you. I chose SharpBox. It looks like a dead project (no commits since 2011), but the latest version is still available on NuGet.

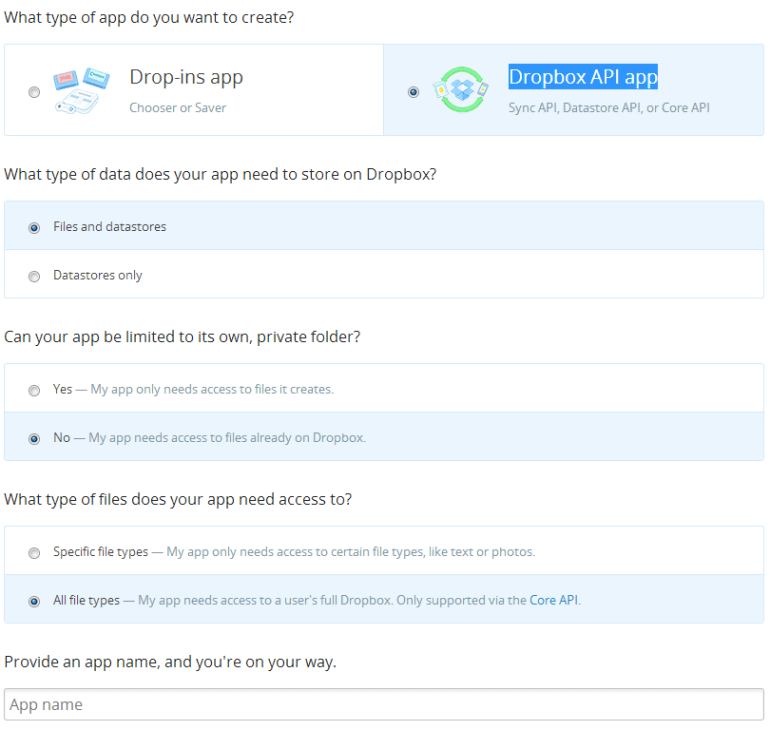

First, you need to go to the Dropbox App Console and create a new app. Click the “Create app” button and answer the questions as shown in the picture.

When you finish all these steps, you will get an App key and an App secret. Copy them somewhere, because you will need them later. Now we are ready to create our application: create a new F# project and add the AppLimit.CloudComputing.SharpBox package from NuGet.

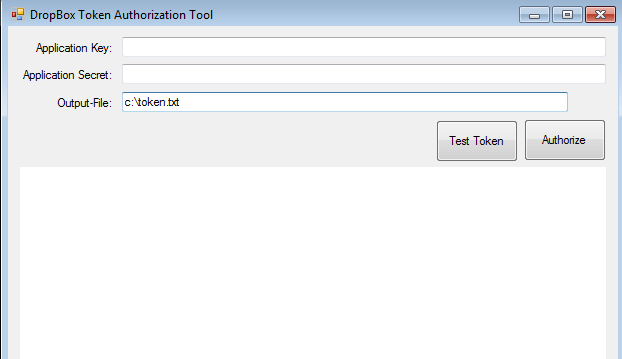

After the package is downloaded, go to the packages\AppLimit.CloudComputing.SharpBox.1.2.0.542\lib\net40-full folder, then find and start the DropBoxTokenIssuer.exe application.

Fill in Application Key and Application Secret with the values that you received when creating the app, set the Output-File path to c:\token.txt, and click “Authorize”. Wait a few seconds (depending on your Internet connection) and follow the steps that appear in the browser control on the form: you will need to sign in to Dropbox with your Dropbox account and grant your app access to your files. Once the token file has been created, you can click the “Test Token” button to make sure that it is correct.

Using the token file, you can work with Dropbox files without direct user interaction, as shown in the sample below:

open System.IO
open AppLimit.CloudComputing.SharpBox

[<EntryPoint>]
let main argv =
    let dropBoxStorage = new CloudStorage()
    let dropBoxConfig = CloudStorage.GetCloudConfigurationEasy(nSupportedCloudConfigurations.DropBox)
    // load a valid security token from file
    use fs = File.Open(@"C:\token.txt", FileMode.Open, FileAccess.Read, FileShare.None)
    let accessToken = dropBoxStorage.DeserializeSecurityToken(fs)
    // open the connection
    let storageToken = dropBoxStorage.Open(dropBoxConfig, accessToken)
    for folder in dropBoxStorage.GetRoot() do
        printfn "%s" (folder.Name)
    dropBoxStorage.Close()
    0

Stanford Word Segmenter is available on NuGet

Update (2014, January 3): Links and/or samples in this post might be outdated. The latest versions of the samples are available on the new Stanford.NLP.NET site.

Tokenization of raw text is a standard pre-processing step for many NLP tasks. For English, tokenization usually involves punctuation splitting and separation of some affixes like possessives. Other languages require more extensive token pre-processing, which is usually called segmentation.
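To make the idea concrete, here is a toy F# tokenizer that splits off punctuation and the English suffixes 's and n't. It only illustrates the concept. It is not the PTB tokenizer that CoreNLP uses, and it handles far fewer cases. toyTokenize is a hypothetical name.

```fsharp
open System.Text.RegularExpressions

/// Toy English tokenizer (illustration only): splits off "n't", "'s" and punctuation.
/// Alternatives are tried left to right: the suffixes first, then a word that is
/// directly followed by "n't", then any word, then any single punctuation character.
let toyTokenize (text: string) =
    Regex.Matches(text, @"n't|'s|\w+(?=n't)|\w+|[^\w\s]")
    |> Seq.cast<Match>
    |> Seq.map (fun m -> m.Value)
    |> List.ofSeq
```

For example, toyTokenize "He didn't get a reply." produces the same seven tokens as the CoreNLP output above: He, did, n't, get, a, reply, and the final period.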

The Stanford Word Segmenter currently supports Arabic and Chinese. The provided segmentation schemes have been found to work well for a variety of applications.

One more tool from the Stanford NLP software packages became available on NuGet today: the Stanford Word Segmenter. This is the fourth Stanford NuGet package published by me; the previous ones were “Stanford Parser”, “Stanford Named Entity Recognizer (NER)” and “Stanford Log-linear Part-Of-Speech Tagger”. Please follow these steps to get started:

- Install-Package Stanford.NLP.Segmenter

- Download models from The Stanford NLP Group site.

- Extract the models from the ‘data’ folder.

- You are ready to start.

F# Sample

For more details, see the source code on GitHub.

open java.util
open edu.stanford.nlp.ie.crf

[<EntryPoint>]
let main argv =
    if (argv.Length <> 1) then
        printfn "usage: StanfordSegmenter.Csharp.Samples.exe filename"
    else
        let props = Properties()
        props.setProperty("sighanCorporaDict", @"..\..\..\..\temp\stanford-segmenter-2013-06-20\data") |> ignore
        props.setProperty("serDictionary", @"..\..\..\..\temp\stanford-segmenter-2013-06-20\data\dict-chris6.ser.gz") |> ignore
        props.setProperty("testFile", argv.[0]) |> ignore
        props.setProperty("inputEncoding", "UTF-8") |> ignore
        props.setProperty("sighanPostProcessing", "true") |> ignore
        let segmenter = CRFClassifier(props)
        segmenter.loadClassifierNoExceptions(@"..\..\..\..\temp\stanford-segmenter-2013-06-20\data\ctb.gz", props)
        segmenter.classifyAndWriteAnswers(argv.[0])
    0

C# Sample

For more details, see the source code on GitHub.

using java.util;
using edu.stanford.nlp.ie.crf;

namespace StanfordSegmenter.Csharp.Samples
{
    class Program
    {
        static void Main(string[] args)
        {
            if (args.Length != 1)
            {
                System.Console.WriteLine("usage: StanfordSegmenter.Csharp.Samples.exe filename");
                return;
            }

            var props = new Properties();
            props.setProperty("sighanCorporaDict", @"..\..\..\..\temp\stanford-segmenter-2013-06-20\data");
            props.setProperty("serDictionary", @"..\..\..\..\temp\stanford-segmenter-2013-06-20\data\dict-chris6.ser.gz");
            props.setProperty("testFile", args[0]);
            props.setProperty("inputEncoding", "UTF-8");
            props.setProperty("sighanPostProcessing", "true");

            var segmenter = new CRFClassifier(props);
            segmenter.loadClassifierNoExceptions(@"..\..\..\..\temp\stanford-segmenter-2013-06-20\data\ctb.gz", props);
            segmenter.classifyAndWriteAnswers(args[0]);
        }
    }
}

MSR-SPLAT Overview for F# (.NET NLP)

A few weeks ago, Microsoft Research announced an NLP toolkit called MSR SPLAT. It is time to play with it and take a look at what it can do.

The Statistical Parsing and Linguistic Analysis Toolkit is a linguistic analysis toolkit. Its main goal is to allow easy access to the linguistic analysis tools produced by the Natural Language Processing group at Microsoft Research. The tools include both traditional linguistic analysis tools, such as part-of-speech taggers and parsers, and more recent developments, such as sentiment analysis (identifying whether a particular piece of text has positive or negative sentiment towards its focus).

SPLAT has a nice Silverlight DEMO app that lets you try all available functionality.

SPLAT also has WCF and RESTful endpoints, but if you want to use them, you need to request an access key (please email Pallavi Choudhury). For more details, please read the overview article “MSR SPLAT, a language analysis toolkit”.

Important links:

- MSR SPLAT official project page.

- Silverlight DEMO app deployed to Windows Azure.

- Article: “MSR SPLAT, a language analysis toolkit”

Test Drive

I received my GUID together with an example of using the JSON service from C#, which you can find below.

// Requires: using System; using System.IO; using System.Net;
private static void CallSplatJsonService()
{
    // The list of analyzers is available at
    // http://msrsplat.cloudapp.net/SplatServiceJson.svc/Analyzers?language=en&json=x
    string language = "en";
    string input = "I live in Seattle";
    string analyzerList = "POS_tags,Tokens";
    string appId = "XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX";

    // Escape the input text, since it goes into the query string
    string requestAnalyze = String.Format(
        "http://msrsplat.cloudapp.net/SplatServiceJson.svc/Analyze?language={0}&analyzers={1}&appId={2}&json=x&input={3}",
        language, analyzerList, appId, Uri.EscapeDataString(input));

    var request = WebRequest.Create(requestAnalyze);
    request.ContentType = "application/json; charset=utf-8";
    request.Method = "GET";

    using (Stream s = request.GetResponse().GetResponseStream())
    using (StreamReader sr = new StreamReader(s))
    {
        var jsonData = sr.ReadToEnd();
        Console.WriteLine(jsonData);
    }
}
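When calling the JSON endpoint yourself, every query value should be URL-escaped, because the input text usually contains spaces and punctuation. Here is a minimal F# sketch of building the same Analyze URL as the C# sample above. The endpoint and parameter names are copied from that sample. analyzeUrl is a hypothetical helper.

```fsharp
open System

/// Hypothetical helper: builds the SPLAT JSON "Analyze" URL, escaping every query value.
let analyzeUrl (language: string) (analyzers: string list) (appId: string) (input: string) =
    let q (s: string) = Uri.EscapeDataString(s)
    sprintf "http://msrsplat.cloudapp.net/SplatServiceJson.svc/Analyze?language=%s&analyzers=%s&appId=%s&json=x&input=%s"
        (q language) (q (String.concat "," analyzers)) (q appId) (q input)
```

For the C# sample's values, the query ends with input=I%20live%20in%20Seattle. The comma between analyzer names is sent as %2C, and the server is expected to decode it.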

In the following samples, I use the WCF endpoint, since the WsdlService type provider dramatically simplifies access to the service.

#r "FSharp.Data.TypeProviders.dll"
#r "System.ServiceModel.dll"
#r "System.Runtime.Serialization.dll"

open System
open Microsoft.FSharp.Data.TypeProviders

type MSRSPLAT = WsdlService<"http://msrsplat.cloudapp.net/SplatService.svc?wsdl">
let splat = MSRSPLAT.GetBasicHttpBinding_ISplatService()

In the first call, splat.Languages(), we ask SPLAT to return the list of supported languages, and we get [|"en"; "bg"|] (English and Bulgarian). Mystical Bulgaria… I do not know why, but NLP people like Bulgaria. There is something special about it for NLP :).

The Stanford NLP Group has relocated in Bulgaria … temporarily.

— Stanford NLP Group (@stanfordnlp) August 3, 2013

The next call is splat.Analyzers("en"), which returns the list of all analyzers available for English (all of them are also available in the DEMO app):

- “Base Forms-LexToDeriv-DerivFormsC#”

- “Chunker-SpecializedChunks-ChunkerC++”

- “Constituency_Forest-PennTreebank3-SplitMerge”

- “Constituency_Tree-PennTreebank3-SplitMerge”

- “Constituency_Tree_Score-Score-SplitMerge”

- “CoRef-PennTreebank3-UsingMentionsAndHeadFinder”

- “Dependency_Tree-PennTreebank3-ConvertFromConstTree”

- “Katakana_Transliterator-Katakana_to_English-Perceptron”

- “Lemmas-LexToLemma-LemmatizerC#”

- “Named_Entities-CONLL-CRF”

- “POS_Tags-PennTreebank3-cmm”

- “Semantic_Roles-PropBank-kristout”

- “Semantic_Roles_Scores-PropBank-kristout”

- “Sentiment-PosNeg-MaxEntClassifier”

- “Stemmer-PorterStemmer-PorterStemmerC#”

- “Tokens-PennTreebank3-regexes”

- “Triples-SimpleTriples-ExtractFromDeptree”

This is the list of full names of the analyzers that are available for now. The part of the analyzer’s name that you have to pass to the service to perform the corresponding analysis is the part before the first dash (for example, Sentiment or Tokens). To perform the analysis, you need an access GUID, which you pass to the splat.Analyze method in the parameter named email. It is probably a typo, but that is how it is. Let’s call all analyzers on one of our favorite sentences, “All your types are belong to us”, and look at the result.

let appId = "XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX"
// Short analyzer names: the part before the first '-'
let analyzers =
    splat.Analyzers("en")
    |> Array.map (fun s -> s.Split([|'-'|]).[0])
    |> String.concat ","
let text = "All your types are belong to us"
let bag = splat.Analyze("en", analyzers, text, appId)
bag.Analyses

The result is:

[|"[["0-all","1-you","2-types","3-are","4-belong","5-to","6-us"]]";

"["[NP All your types] [VP are] [VP belong] [PP to] [NP us] \u000a"]";

"["@@All your types are belong to us\u000d\u000a0\u0009G_DT..."]";

"["(TOP (S (NP (PDT All) (PRP$ your) (NNS types)) (VP (VBP are) (VP (VB belong) (PP (TO to) (NP (PRP us)))))))"]";

"[-2.2476917857427452]";

"[[{"LengthInTokens":3,"Sentence":0,"StartTokenOffset":0}],[{"LengthInTokens":1,"Sentence":0,"StartTokenOffset":1}],[{"LengthInTokens":1,"Sentence":0,"StartTokenOffset":6}]]";

"[[{"Parent":3,"Tag":"PDT","Word":"All"},{"Parent":3,"Tag":"PRP$","Word":"your"},{"Parent":4,"Tag":"NNS","Word":"types"},{"Parent":0,"Tag":"VBP","Word":"are"},{"Parent":4,"Tag":"VB","Word":"belong"},{"Parent":5,"Tag":"TO","Word":"to"},{"Parent":6,"Tag":"PRP","Word":"us"}]]";

"["14.50%: アリオータイプサレベロングタス","13.27%: オールユアタイプサレベロングタス","13.26%: アルユアタイプサレベロングタス","13.26%: アリオールタイプサレベロングタス","11.34%: アリオウルタイプサレベロングタス","7.81%: アルルユアタイプサレベロングタス","7.10%: アリアウータイプサレベロングタス","6.60%: アリアウルタイプサレベロングタス","6.46%: アリーオータイプサレベロングタス","6.40%: アリオータイプサリベロングタス"]";

"[["All","your","type","are","belong","to","us"]]";

"[{"Len":0,"Offset":0,"Tokens":[]}]";

"[["DT","PRP$","NNS","VBP","IN","TO","PRP"]]";

"[["4-4\/belong[A1=0-2\/All_your_types, A1=5-6\/to_us]"]]";

"[[-0.33393750773577313]]";

"{"Classification":"pos","Probability":0.59141720028208355}";

"[["All","your","type","ar","belong","to","us"]]";

"[{"Len":31,"Offset":0,"Tokens":[{"Len":3,"NormalizedToken":"All","Offset":0,"RawToken":"All"},{"Len":4,"NormalizedToken":"your","Offset":4,"RawToken":"your"},{"Len":5,"NormalizedToken":"types","Offset":9,"RawToken":"types"},{"Len":3,"NormalizedToken":"are","Offset":15,"RawToken":"are"},{"Len":6,"NormalizedToken":"belong","Offset":19,"RawToken":"belong"},{"Len":2,"NormalizedToken":"to","Offset":26,"RawToken":"to"},{"Len":2,"NormalizedToken":"us","Offset":29,"RawToken":"us"}]}]";

"[["are_belong_to(types, us)"]]"|]

As you can see, the service returns the result as string[]. All the result strings are human-readable and formatted according to “NLP standards”, but some of them are really hard to parse programmatically. FSharp.Data and its JSON type provider can help with the strings that contain valid JSON objects.

For example, if you need to use the “Sentiment-PosNeg-MaxEntClassifier” analyzer in a strongly typed way, you can do it as follows:

#r @"..\packages\FSharp.Data.1.1.9\lib\net40\FSharp.Data.dll"
open FSharp.Data

type SentimentsProvider = JsonProvider<""" {"Classification":"pos","Probability":0.59141720028208355} """>

let bag2 = splat.Analyze("en", "Sentiment", "I love F#.", appId)
let sentiments = SentimentsProvider.Parse(bag2.Analyses.[0])
printfn "Class:'%s' Probability:'%M'"
    (sentiments.Classification) (sentiments.Probability)

For analyzers like “Constituency_Tree-PennTreebank3-SplitMerge”, you need to write a custom parser that processes the bracket expression (“(TOP (S (NP (PDT All) (PRP$ your) (NNS types)) (VP (VBP are) (VP (VB belong) (PP (TO to) (NP (PRP us)))))))”) and builds a tree for you. If you are too lazy to do it yourself (and you should be), you can download SilverlightSplatDemo.xap and decompile the source code: all the parsers are already implemented there for the DEMO app. But this approach is not as easy as it should be.
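Here is a minimal sketch of such a parser in F#, written from scratch. It is not the parser from the SPLAT demo app. It turns the bracket string into a small ParseTree union. ParseTree, bracketTokens, parseTree, parseBracketTree and leaves are my own hypothetical names. The sketch assumes that every "(" is immediately followed by a label, as in the SPLAT output above.

```fsharp
/// A parse tree: a labelled node with children, or a word at a leaf.
type ParseTree =
    | Leaf of string
    | Node of string * ParseTree list

/// Splits a bracket expression into "(", ")" and label/word tokens.
let bracketTokens (s: string) =
    let spaced = s.Replace("(", " ( ").Replace(")", " ) ")
    spaced.Split([| ' '; '\t'; '\r'; '\n' |], System.StringSplitOptions.RemoveEmptyEntries)
    |> List.ofArray

/// Parses one tree from the front of the token list; returns it with the leftover tokens.
let rec parseTree (tokens: string list) : ParseTree * string list =
    match tokens with
    | "(" :: label :: rest ->
        // Parse children until the matching ")"
        let rec children acc ts =
            match ts with
            | ")" :: rest' -> List.rev acc, rest'
            | _ ->
                let child, rest' = parseTree ts
                children (child :: acc) rest'
        let kids, rest' = children [] rest
        Node(label, kids), rest'
    | word :: rest when word <> "(" && word <> ")" -> Leaf word, rest
    | _ -> failwith "malformed bracket expression"

let parseBracketTree (s: string) = fst (parseTree (bracketTokens s))

/// Collects the words at the leaves, left to right.
let rec leaves tree =
    match tree with
    | Leaf word -> [ word ]
    | Node(_, kids) -> List.collect leaves kids
```

For the SPLAT tree above, leaves returns the original words, All your types are belong to us. Stanford CoreNLP’s pennPrint uses the same bracket notation, so this parser should also handle those trees, including the line breaks and indentation.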

Summary

MSR SPLAT looks like a really powerful and promising toolkit. I hope that it continues growing.

My only wish is an improved API: it should be possible to use the services in a strongly typed way. The easiest option would be to add the ability to get all results as JSON, without any cnf forms and so on. Alternatively, this could be achieved by changing the WCF service to expose analysis results in a typed way instead of string[].

F# Type Providers: News from the battlefields

“All your types are belong to us”

Don Syme

This post is intended, first of all, for F# developers, to show the big picture of the World of F# Type Providers. Here you can find a list of articles/posts about building type providers, a list of existing type providers (which are probably waiting for your help), and a list of open opportunities.

List of materials that can be useful if you want to create a new type provider:

- Tutorial: Creating a Type Provider (F#)

- Type Providers

- “Programming F# 3.0” by Chris Smith

- “F# for C# Developers” by Tao Liu

- F# Type Provider Template

- Building Type Providers – Part 1

- Self Note: Debug Type Provider

- How do I create an F# Type Provider that can be used from C#?

- F#3.0 – Strongly-Typed Language Support for Internet-Scale Information Sources

List of available type providers:

- Microsoft.FSharp.Data.TypeProviders
- Fsharpx
    - AppSettings
    - Excel
    - Graph
    - Machine
    - Management
    - Math
    - Regex
    - Xaml
    - Xrm
- FSharp.Data
- F# 3.0 Sample Pack
    - DGML
    - Word
    - Csv
    - DataStore
    - Hadoop/Hive/Hdfs
    - HelloWorld
    - Management
    - MiniCsv
    - Xrm
- FunScript
- FSharpRProvider
- FCell Type Provider
- Matlab-Type-Provider
- IKVM.TypeProvider
- PythonTypeProvider
- PowerShellTypeProvider
- CYOA (Choose Your Own Adventure type provider)
- WebSharperWithTypeProviders
- INPCTypeProvider
- Tsunami
    - RSS Reader
    - Start Menu
    - NuGetTypeProvider (samples inside Tsunami)
    - S3TypeProvider (samples inside Tsunami)
    - FacebookTypeProvider (samples inside Tsunami)
    - DocumentTypeProvider (samples inside Tsunami)
- FSharp.Data.SqlCommandTypeProvider (NuGet)
- AzureTypeProvider

Open opportunities:

- MongoDB

- SignalR

- European Union Open Data Portal

- HTTP APIs

- SAP

- Solr

- WolframAlpha

- OpenCyc

- MaterialProject

Please let me know if I missed something.

Update 1: Built-in Tsunami type providers were added.

Update 2: SqlCommand and Azure were added.

PowerShell Type Provider

Update (3 February 2014): PowerShell Type Provider merged into FSharp.Management.


I am happy to share with you the first version of the PowerShell Type Provider. The last few days were really hot, but the initial version is finally published.

I went through lots of different emotions during this work =). The Type Provider API is actually much harder than I thought: after reading the books, it looked easier than it turned out to be in reality. The type provider runtime is tricky.

To start, you need to download the source code and build it; there is no NuGet package for now. I want to get some feedback first and then publish a more consistent version to NuGet.

Also note that it is developed using the PowerShell 3.0 runtime and .NET 4.0/4.5, which means that you can use only PowerShell 3.0 snap-ins.

#r @"C:\WINDOWS\Microsoft.Net\assembly\GAC_MSIL\System.Management.Automation\v4.0_3.0.0.0__31bf3856ad364e35\System.Management.Automation.dll"
#r @"C:\WINDOWS\Microsoft.NET\assembly\GAC_MSIL\Microsoft.PowerShell.Commands.Utility\v4.0_3.0.0.0__31bf3856ad364e35\Microsoft.Powershell.Commands.Utility.dll"
#r @"d:\GitHub\PowerShellTypeProvider\PowerShellTypeProvider\bin\Debug\PowerShellTypeProvider.dll"

type PS = FSharp.PowerShell.PowerShellTypeProvider<PSSnapIns="WDeploySnapin3.0">

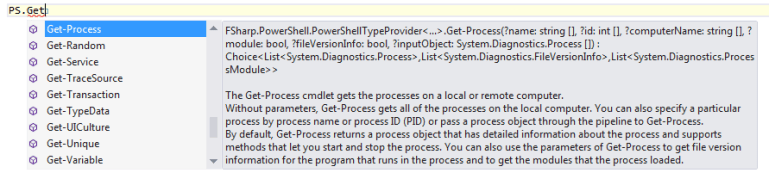

As you can see in the sample, PowerShellTypeProvider has a single mandatory static parameter, PSSnapIns, which contains a semicolon-separated list of the snap-ins that you want to import into PowerShell. If you want to use only the default ones, leave the string empty.

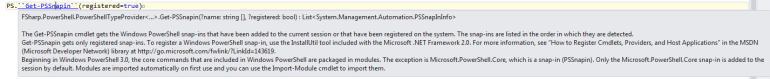

You can find the list of snap-ins registered on your machine using the Get-PSSnapin -Registered cmdlet.


Enjoy it. I will be happy to hear your feedback (as well as comments about the type provider source code from TP gurus).