Update #1: The Newsletter

Every Monday since 2014, I’ve sent the F# Weekly newsletter to your mailboxes, nearly 500 editions to date. From the outset, I’ve used TinyLetter, a simple platform that handles subscription and delivery of the newsletter.

However, Mailchimp, which acquired TinyLetter in 2011, decided to shut it down on February 29, 2024. Consequently, I had to find a new home for the newsletter.

Seeing as the F# Weekly newsletter boasts nearly 1000 subscribers—a number exceeding the free tier limit offered by most services—I made the decision to move the newsletter into my WordPress blog, a resource I’ve already invested in, rather than integrating or purchasing a new service. Some of you are already reading this post from within your inbox.

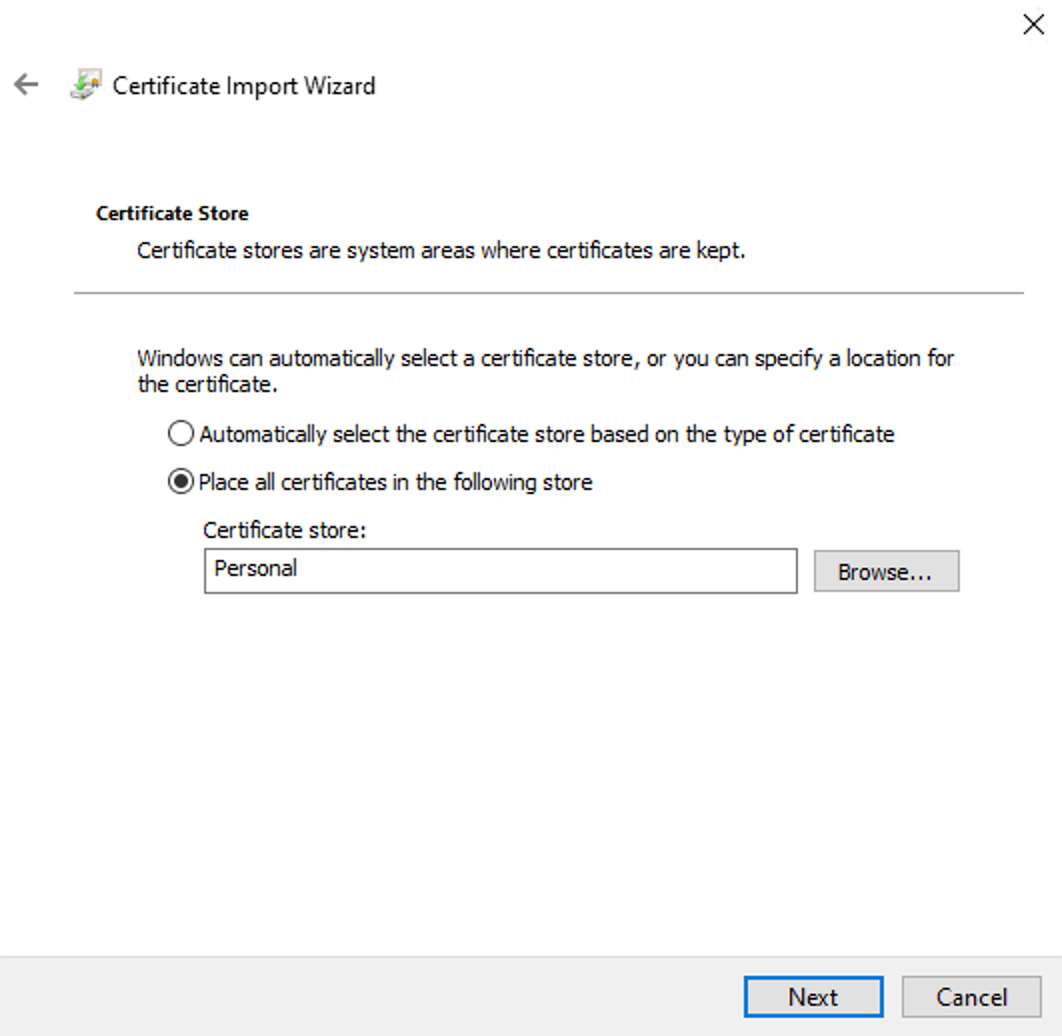

You may wonder what this means for you. It means that you will also receive email notifications about my occasional blog posts, in addition to the F# Weekly newsletters. If this differs from what you originally signed up for, I sincerely apologize. Feel free to modify or cancel your subscription. Fortunately, I’ve managed to configure WordPress to let you set your subscription preferences (as seen in the screenshot). If my “musings” exceed your tolerance, you can simply select the F# Weekly category 😁

I am immensely grateful to you for joining me on this journey and consuming every piece of F# news I’ve delivered. Still not subscribed? You can do so from this page 😉

Update #2: Twitter/X API Drama

Several moons ago, I put together an automation system that enabled me to gather #fsharp tweets with links, trimming my time spent crafting each F# Weekly down from 3-4 hours to about a single hour. Half a decade ago, I made this tool publicly available, transitioning it to .NET Core and Azure Functions, and deployed it on Azure via my MVP Azure credits.

But as fate would have it, in March 2023, Elon Musk decided to phase out Twitter API v1.1 and began charging enthusiasts who read tweets programmatically a hefty $100+ monthly fee. This abrupt change drove me back to assembling F# Weekly by hand, which left me heartbroken. 💔

If you’ve noticed a dip in the quality of F# Weekly or found that I’ve overlooked your posts or videos on occasion:

- Make sure to attach the #fsharp tag to your posts if you choose to announce them on X.

- Don’t hesitate to tag me directly or @fsharponline if you’d like more visibility or retweets. If either account retweets your post, it’s almost certain to catch my eye during newsletter assembly.

- If X isn’t your platform of choice, feel free to mention me on Mastodon or send me an email at sergey.tihon[at]gmail.com if you abstain from social media altogether.

If you have any genius ideas on improving the news collection process, making it more resilient, or possibly even rolling it out on a wider scale, I’m all ears! Don’t hesitate to reach out on social media or leave a comment on this post.

Update #3: Warm Gratitude for Donations

In 2021, I set up a Buy Me a Coffee page as a delightful way for you to treat me to a cup of coffee. I want to pour out a tidal wave of THANKS to everyone who kindly donated over the last three years! Your generosity warms my heart. ❤️

The donations have sprinkled extra joy into my everyday life, allowing me to occasionally indulge in non-essential buys without the guilt of dipping into family funds. Here are a few examples of how I’ve put your contributions to use (beyond covering my WordPress subscription and domain expenses):

First and foremost, I’ve been making a monthly donation to the Ionide project, and I would earnestly urge you to consider doing the same if you’re able. It’s crucial that we preserve a free, cross-platform F# IDE.

I’ve been battling hand discomfort, with pain that sometimes shoots into my wrists and fingers. I regularly find myself alternating between mice and occasionally keyboards. This year, I decided to give myself an early Christmas present: an ergonomic, columnar, curved keyboard, the MoErgo Glove80 with Red Pro Linear 35gf switches, and so far, it has won me over. 😊 I’ve swiftly reached a comfortable typing speed that allows me to work without interruptions throughout the day.

Three years ago, I set out to balance my screen time by swapping my digital devices for the tactile joy of printed books, finding solace in their immersive stories just before bedtime. The therapeutic effect has improved not only my sleep but also my overall well-being. Over time, I’ve amassed a collection of captivating books. For those who share my love for reading, feel free to send me a friend request on Goodreads. I’m always curious to know what tales are keeping you hooked!

Recently, I found it impossible to resist spoiling my “big dog” 🐶 with a cozy new kennel for her to curl up in.

Once again, my heartfelt thanks to all of you for your generosity and for investing your time into reading F# Weekly. Here’s wishing you a 2024 brimming with joy and success!
