Some weeks ago, Microsoft Research announced NLP toolkit called MSR SPLAT. It is time to play with it and take a look what it can do.

Statistical Parsing and Linguistic Analysis Toolkit is a linguistic analysis toolkit. Its main goal is to allow easy access to the linguistic analysis tools produced by the Natural Language Processing group at Microsoft Research. The tools include both traditional linguistic analysis tools such as part-of-speech taggers and parsers, and more recent developments, such as sentiment analysis (identifying whether a particular of text has positive or negative sentiment towards its focus)

Statistical Parsing and Linguistic Analysis Toolkit is a linguistic analysis toolkit. Its main goal is to allow easy access to the linguistic analysis tools produced by the Natural Language Processing group at Microsoft Research. The tools include both traditional linguistic analysis tools such as part-of-speech taggers and parsers, and more recent developments, such as sentiment analysis (identifying whether a particular of text has positive or negative sentiment towards its focus)

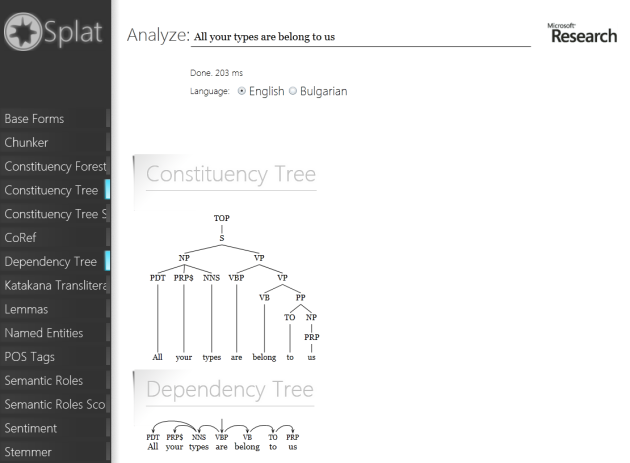

SPLAT has a nice Silverlight DEMO app that lets you try all available functionality.

SPLAT also has WCF and RESTful endpoints, but if you want to use them, you need to request an access key(please email to Pallavi Choudhury). For more details, please read an overview article “MSR SPLAT, a language analysis toolkit“.

Important links:

Test Drive

I have received my GUID with example of using Json service from C# that you can find below.

private static void CallSplatJsonService()

{

var requestStr = String.Format("http://msrsplat.cloudapp.net/SplatServiceJson.svc/Analyzers?language={0}&json=x", "en");

string language = "en";

string input = "I live in Seattle";

string analyzerList = "POS_tags,Tokens";

string appId = "XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX";

string requestAnanlyse = String.Format("http://msrsplat.cloudapp.net/SplatServiceJson.svc/Analyze?language={0}&analyzers={1}&appId={2}&json=x&input={3}",

language, analyzerList, appId, input);

var request = WebRequest.Create(requestAnanlyse);

request.ContentType = "application.json; charset=utf-8";

request.Method = "GET";

string postData = String.Format("/{0}?language={1}&json=x","Analyzers", "en");

using(Stream s = request.GetResponse().GetResponseStream())

{

using(StreamReader sr = new StreamReader(s))

{

var jsonData = sr.ReadToEnd();

Console.WriteLine(jsonData);

}

}

}

In following samples, I used WCF endpoint since WsdlService Type Provider can dramatically simplify access to the service.

#r "FSharp.Data.TypeProviders.dll"

#r "System.ServiceModel.dll"

#r "System.Runtime.Serialization.dll"

open System

open Microsoft.FSharp.Data.TypeProviders

type MSRSPLAT = WsdlService<"http://msrsplat.cloudapp.net/SplatService.svc?wsdl">

let splat = MSRSPLAT.GetBasicHttpBinding_ISplatService()

In the first call we ask the SPLAT to return list of supported languages splat.Languages() and you will see [|”en”; “bg”|] (English and Bulgarian). The mystical Bulgaria… I do not know why, but NLP guys like Bulgaria. There is something special for NLP :).

The next call is splat.Analyzers(“en”) that returns list of all analyzers that are available for English language (All of them are available from DEMO app)

- “Base Forms-LexToDeriv-DerivFormsC#”

- “Chunker-SpecializedChunks-ChunkerC++”

- “Constituency_Forest-PennTreebank3-SplitMerge”

- “Constituency_Tree-PennTreebank3-SplitMerge”

- “Constituency_Tree_Score-Score-SplitMerge”

- “CoRef-PennTreebank3-UsingMentionsAndHeadFinder”

- “Dependency_Tree-PennTreebank3-ConvertFromConstTree”

- “Katakana_Transliterator-Katakana_to_English-Perceptron”

- “Lemmas-LexToLemma-LemmatizerC#”

- “Named_Entities-CONLL-CRF”

- “POS_Tags-PennTreebank3-cmm”

- “Semantic_Roles-PropBank-kristout”

- “Semantic_Roles_Scores-PropBank-kristout”

- “Sentiment-PosNeg-MaxEntClassifier”

- “Stemmer-PorterStemmer-PorterStemmerC#”

- “Tokens-PennTreebank3-regexes”

- “Triples-SimpleTriples-ExtractFromDeptree”

This is a list of full names of analyzers that are available for now. The part of the analyzer’s name that you have to pass to the service to perform corresponding analysis is highlighted in bold. To perform the analysis, you need to have an access guid and pass it as an email to splat.Analyze method. It is probably a typo, but as it is. Let’s call all analyzers on the one of our favorite sentences “All your types are belong to us” and look at the result.

let appId = "XXXXXXXX-XXXX-XXXX-XXXX-XXXXXXXXXXXX"

let analyzers = String.Join(",", splat.Analyzers("en")

|> Array.map (fun s -> s.Split([|'-'|]).[0]))

let text = "All your types are belong to us"

let bag = splat.Analyze("en", analyzers, text, appId)

bag.Analyses

The result is

[["0-all","1-you","2-types","3-are","4-belong","5-to","6-us"]]";

"["[NP All your types] [VP are] [VP belong] [PP to] [NP us] \u000a"]";

"["@@All your types are belong to us\u000d\u000a0\u0009G_DT..."]";

"["(TOP (S (NP (PDT All) (PRP$ your) (NNS types)) (VP (VBP are) (VP (VB belong) (PP (TO to) (NP (PRP us)))))))"]";

"[-2.2476917857427452]";

"[[{"LengthInTokens":3,"Sentence":0,"StartTokenOffset":0}],[{"LengthInTokens":1,"Sentence":0,"StartTokenOffset":1}],[{"LengthInTokens":1,"Sentence":0,"StartTokenOffset":6}]]";

"[[{"Parent":3,"Tag":"PDT","Word":"All"},{"Parent":3,"Tag":"PRP$","Word":"your"},{"Parent":4,"Tag":"NNS","Word":"types"},{"Parent":0,"Tag":"VBP","Word":"are"},{"Parent":4,"Tag":"VB","Word":"belong"},{"Parent":5,"Tag":"TO","Word":"to"},{"Parent":6,"Tag":"PRP","Word":"us"}]]";

"["14.50%: アリオータイプサレベロングタス","13.27%: オールユアタイプサレベロングタス","13.26%: アルユアタイプサレベロングタス","13.26%: アリオールタイプサレベロングタス","11.34%: アリオウルタイプサレベロングタス","7.81%: アルルユアタイプサレベロングタス","7.10%: アリアウータイプサレベロングタス","6.60%: アリアウルタイプサレベロングタス","6.46%: アリーオータイプサレベロングタス","6.40%: アリオータイプサリベロングタス"]";

"[["All","your","type","are","belong","to","us"]]";

"[{"Len":0,"Offset":0,"Tokens":[]}]";

"[["DT","PRP$","NNS","VBP","IN","TO","PRP"]]";

"[["4-4\/belong[A1=0-2\/All_your_types, A1=5-6\/to_us]"]]";

"[[-0.33393750773577313]]";

"{"Classification":"pos","Probability":0.59141720028208355}";

"[["All","your","type","ar","belong","to","us"]]";

"[{"Len":31,"Offset":0,"Tokens":[{"Len":3,"NormalizedToken":"All","Offset":0,"RawToken":"All"},{"Len":4,"NormalizedToken":"your","Offset":4,"RawToken":"your"},{"Len":5,"NormalizedToken":"types","Offset":9,"RawToken":"types"},{"Len":3,"NormalizedToken":"are","Offset":15,"RawToken":"are"},{"Len":6,"NormalizedToken":"belong","Offset":19,"RawToken":"belong"},{"Len":2,"NormalizedToken":"to","Offset":26,"RawToken":"to"},{"Len":2,"NormalizedToken":"us","Offset":29,"RawToken":"us"}]}]";

"[["are_belong_to(types, us)"]]"|]

As you see, service returns result as string[]. All result strings are readable for human eyes and formatted according to “NLP standards”, but some of them are really hard to parse programmatically. FSharp.Data and JSON Type Provider can help with strings that contain correct Json objects.

For example, if you need to use “Sentiment-PosNeg-MaxEntClassifier” analyzer in strongly typed way, then you can do it as follows:

#r @"..\packages\FSharp.Data.1.1.9\lib\net40\FSharp.Data.dll"

open FSharp.Data

type SentimentsProvider = JsonProvider<""" {"Classification":"pos","Probability":0.59141720028208355} """>

let bag2 = splat.Analyze("en", "Sentiment", "I love F#.", appId)

let sentiments = SentimentsProvider.Parse(bag2.Analyses.[0])

printfn "Class:'%s' Probability:'%M'"

(sentiments.Classification) (sentiments.Probability)

For analyzers like “Constituency_Tree-PennTreebank3-SplitMerge” you need to write custom parser that proceses bracket expression (“(TOP (S (NP (PDT All) (PRP$ your) (NNS types)) (VP (VBP are) (VP (VB belong) (PP (TO to) (NP (PRP us)))))))”) and builds a tree for you. If you are lazy to do it yourself (you should be so), you can download SilverlightSplatDemo.xap and decompile source code. All parsers are already implemented there for DEMO app. But this approach is not so easy as it should be.

Summary

MSR SPLAT looks like a really powerful and promising toolkit. I hope that it continues growing.

The only wish is an API improvement. I think there should be possible to use services in a strongly typed way. The easiest way is to add an ability to get all results as Json without any cnf forms and so on. Also it can be achieved by changing WCF service and exposing analysis results in a typed way instead of string[].

Neural networks are very powerful tool and at the same time, it is not easy to use all its power. Now we are one step closer to it from F# and .NET. We will delegate model training to

Neural networks are very powerful tool and at the same time, it is not easy to use all its power. Now we are one step closer to it from F# and .NET. We will delegate model training to

Statistical Parsing and Linguistic Analysis Toolkit is a linguistic analysis toolkit. Its main goal is to allow easy access to the linguistic analysis tools produced by the Natural Language Processing group at Microsoft Research. The tools include both traditional linguistic analysis tools such as part-of-speech taggers and parsers, and more recent developments, such as sentiment analysis (identifying whether a particular of text has positive or negative sentiment towards its focus)

Statistical Parsing and Linguistic Analysis Toolkit is a linguistic analysis toolkit. Its main goal is to allow easy access to the linguistic analysis tools produced by the Natural Language Processing group at Microsoft Research. The tools include both traditional linguistic analysis tools such as part-of-speech taggers and parsers, and more recent developments, such as sentiment analysis (identifying whether a particular of text has positive or negative sentiment towards its focus)